What is an LLM

Think of an LLM as a very fancy “auto-complete” or “next word predictor”. Any LLM has 2 files:

A large file with billions of parameters for a neural network model (A neural network in simple terms is like a mesh of “neurons” similar to what we have in our human brains. And similar to human brains, neural networks are capable of learning from data and make decisions)

A tiny file with a few hundred lines of code to run the model

How LLMs are trained

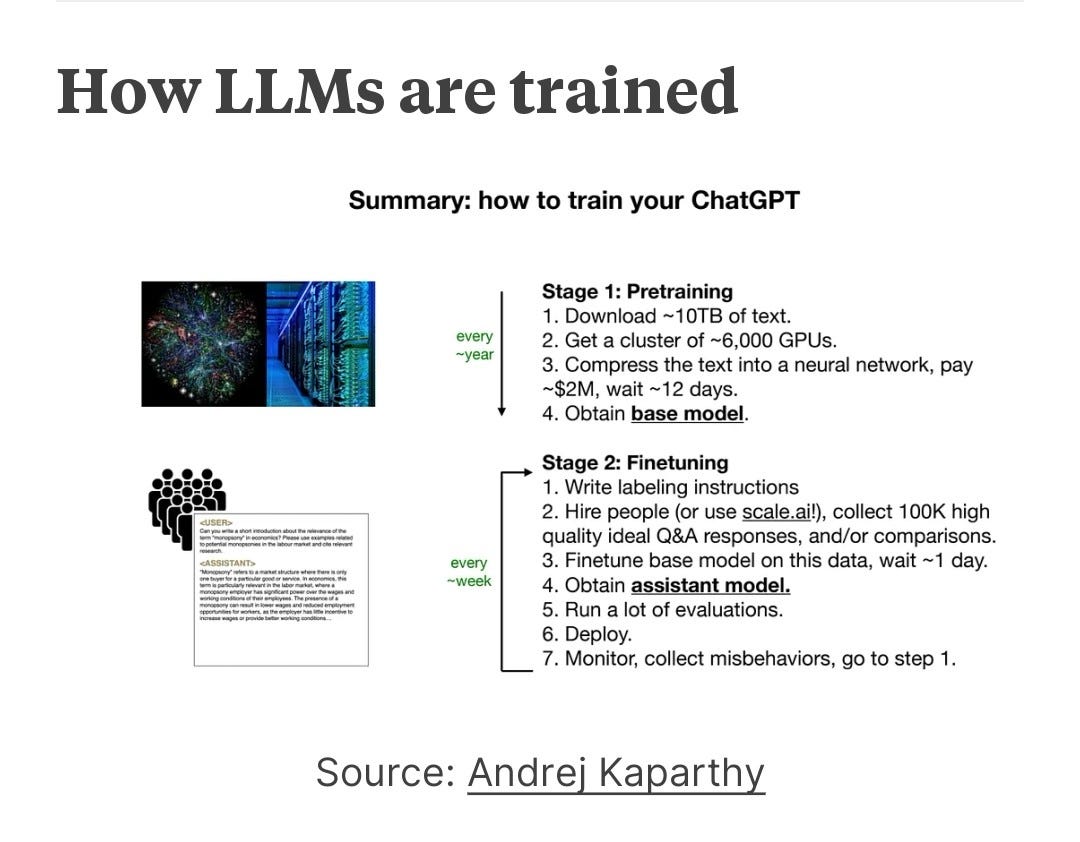

Training any LLM consists of 2 steps essentially: 1. Pretraining 2. Finetuning

Pretraining is a very resource intensive and expensive process (aka GPUs and hence one of the reasons behind the meteoric rise of Nvi*** :) )

Finetuning is much less expensive than pretraining and is basically refining the LLM for a specific usecase based on : 1. Prompt Engineering (this is basically writing prompts in a way that the LLM can give better outputs) 2. Proprietary data from a company’s database and adding it to a prompt (the fancy term for this is Retrieval Augment Generation or RAG)

So how are companies leveraging LLMs today?

Companies are either deploying proprietary LLMs (e.g. OpenAI, Microsoft) and using prompt engineering and RAG to leverage their proprietary databases. Some companies are also finetuning open source LLMs (e.g. Meta’s Llama) to suit their needs

Note: The views and opinions expressed in my Substack posts are my own and not those of any of my current, previous, or future employers.

I like the series. I have a tough time explaining LLMs or neural networks to folks i meet. Using some tips from this.